Application of retrieval augmented generation for conversational interactions

Author: Virginia Kadel

In 2023, GEICO invited the tech organization to a hackathon designed to help us identify how and where LLMs could be used to improve business experiences. One of the event winners proposed a Chat application to conversationally obtain information from users instead of using web forms. The solution leveraged LLMs to enable a conversational interface that could dynamically ask questions to the user based on the state of the underlying data model, the conversation state and the progress towards a predetermined task. While exploring this idea, the team discovered that commercial and open-source LLMs were not infallible or reliably correct. LLMs responses can widely vary without guardrails or constraints. GEICO learned that prompt crafting and context enhancement would be required to create a more reliable experience.

Oh no, hallucinations...

Stochasticity, knowledge limitations, and update lags contribute to a critical issue known as hallucinations [3]. Hallucinations refers to generative models' tendency to generate outputs containing fabricated or inaccurate information, despite appearing plausible [1]. Hallucinations arise from the pattern generation techniques used during training and the inherent delay in model updates. The potential for unreliable or inaccurate outputs often raises concerns, especially in public-facing customer use cases.

Various approaches have been explored since the early days of LLMs to prevent hallucinations, including supervised fine-tuning, prompt engineering, and knowledge graph integration. Retrieval Augmented Generation (RAG) shows promise and is now a widely adopted solution [2] to minimize the risk of hallucination and to support application development that requires access to information beyond the model's internal knowledge. This blog describes some of the processes for an experimental RAG implementation and the insights gained.

What is RAG

Following the taxonomy proposed by Tonmoy, S. M., et al. [4], RAG can be categorized as a prompt engineering technique that aims to provide LLMs with access to authoritative external knowledge rather than relying solely on their internal knowledge base.

Why RAG and not fine tune?

Many practitioners use fine tuning as a last resort to combat hallucinations because models can be adapted to improve their outputs against a specialized task. We considered RAG as a first line of defense due to:

- Cost: Avoids expensive model retraining.

- Flexibility: Incorporates diverse external knowledge sources, updated independently.

- Transparency: Enables easy interpretability and attribution.

- Efficiency: Supports rapid knowledge access.

Our approach

The GPT model allows using a system prompt to provide context and instructions to perform a task. This is where the RAG context is injected for this implementation. For every interaction, a part of the conversation, the task description, and constraints are dynamically composed based on the quote process stage and the user intention.

For instance, if the user needs to provide an address as part of a process, the system composes and sends instructions to the LLM to generate a phrase that will elicit this information. If the user provides the address and asks a question, such as: "I live at .... can you pick up my dog from the vet?", in response the LLM must answer and continue to guide the user through the conversation process.

While this may seem straightforward for a human, it comes with added challenges for an LLM. The LLM might have encoded information related to the question asked, or it might randomly generate a response that aligns with the user's request. The answer can often be partially correct and make assumptions that are not true. For instance, the LLM might respond, "Yes, sure I can pick up your dog from the vet?" which may not be factually right but can appear to be correct.

Incorporating relevant information equips the LLM to provide answers that better align with more stringent expectations for consistency, reliability, and compliance.

How is RAG done?

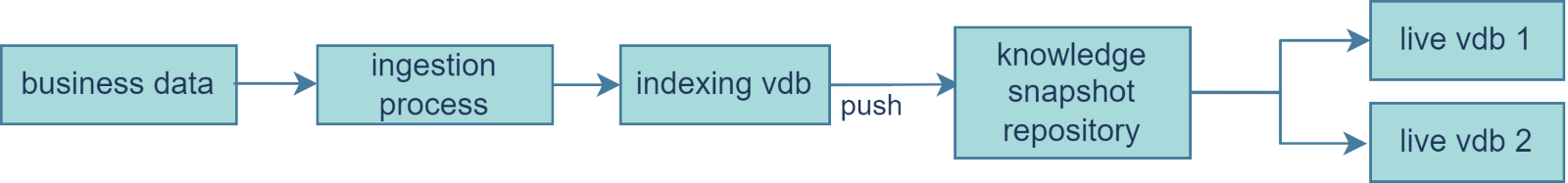

To support RAG functionality, we need to convert business data into their vectorized representations. We established a pipeline for RAG, converting dense knowledge sources into semantic vector representations for efficient retrieval [7]. The ingestion process encompasses splitting documents, converting each to embeddings through an API, then extracting metadata using LLMs. Once the records are split, they are sent along with the metadata and their vector to the database to be indexed.

Creating the multilayer data structure required for the vector indexing can be a resource-intensive mathematical operation, so we designed an offline asynchronous conversion. This approach aims to maximize Queries per Second (QPS) without the load of indexing during retrieval. As a result, we have a component for building collections and creating snapshots for the vector database, minimizing disruption and downtime of the component responsible for retrieval.

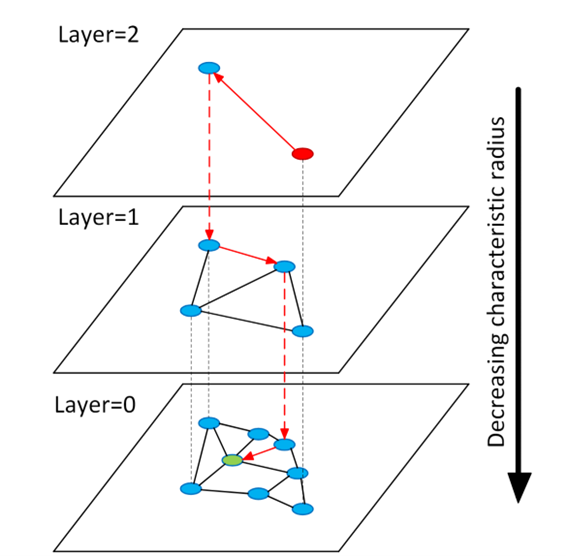

The process' success hinges on the indexing technique used for the non-parametric knowledge base. We evaluated various vector databases, finding Hierarchical Navigable Small World graphs (HNSW) superior [12]. HNSW, based on the k-nearest neighbors algorithm, removes the need for extra knowledge structures, offering efficient search for high-dimensional vectors compared to simple distance-based searching. Modern vector databases support additional indexing methods such as customizable metadata indexing, enhancing retrieval flexibility.

Figure 1 From [8] Hierarchical NSW idea, showing the search reduction during layer navigation.

Where is RAG added?

RAG is not a silver bullet, and it can bring its own challenges, particularly concerning the heterogeneity of input representation, as highlighted by Ji, Ziwei, et al. (2022) [6]. This issue is magnified because the RAG context is incorporated within the already heterogeneous system prompt. Setting clear context and instruction boundaries is crucial.

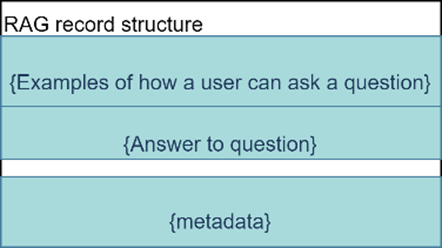

A robust system must retrieve relevant information considering its structure, tone, and syntax for improved content generation. For our initial RAG implementation, accurate retrieval depended on the semantic closeness of the vectorized representation to the user input. This involved encoding the question-answer set to align with user requests and preferred answers. This method is common in intent classification models where all likely inputs map to an expected output. The added context can be confused by the model as system-possible actions. Consider each record is composed of:

The first RAG implementation utilized the entire record within the system prompt, but this approach was ineffective and unreliable. A clear delineation of the record structure allowed for a swift shift to a more refined insertion approach that only included the answer, excluding the examples. This resulted in more focused outcomes.

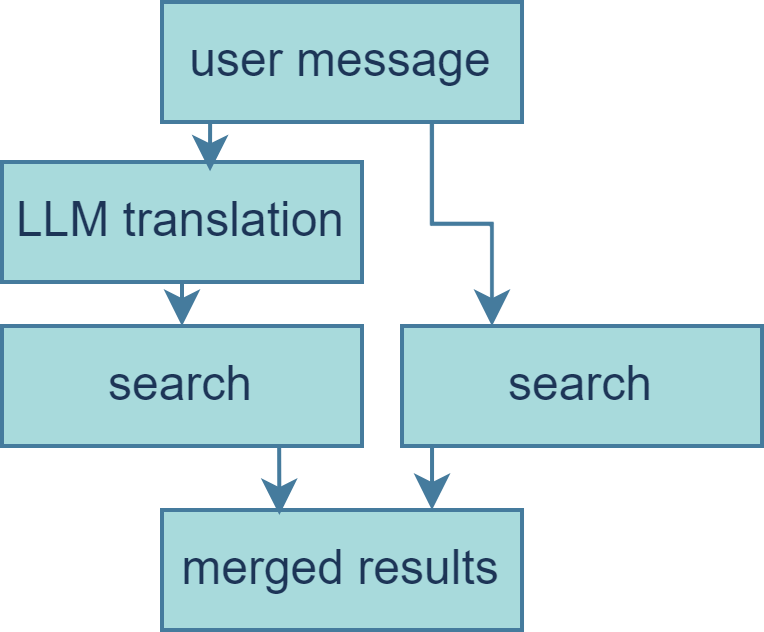

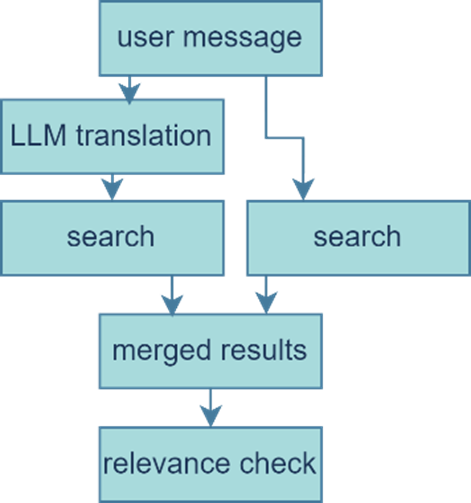

Despite this, irrelevant information was still included in the responses. Given that everyday language can often be fragmented, grammatically incorrect, and varied, every user message was sent to a LLM to translate the input into a form that could be matched within the knowledge base. This translated input was a coherent sentence that tried to predict the question the user was likely to ask, seemed to be asking, or might have wanted to ask, eventually resulted in two records sets retrieved. Both sets were then collected and combined for later insertion into the system prompt.

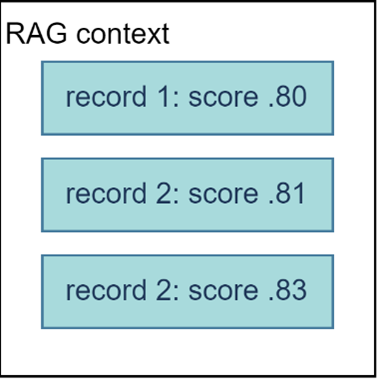

Adding more records sometimes yielded undesired results, so we drew knowledge from research that describes how LLMs can struggle to keep focus on retrieved passages positioned within the middle of the input sequence [11] and decided to implement ranking mechanisms. By reordering retrieved knowledge and prioritizing the most relevant ones at the beginning or end of the sequence, the LLM's focus window improved, leading to more coherent and on-topic generation. This added step ensured that the most semantically relevant knowledge was positioned at the bottom of the RAG context window.

When do we add?

Incorporating more context can lead to increased response variability and more costs. To address these concerns, we introduced a relevance check. We used the same LLM to evaluate the records' relevance to a part of the conversation. Developing a concept of relevance proved challenging and remains an area for improvement. We need to consider factors such as whether all the retrieved contexts are relevant if only portion of the context is applicable, or if the context is relevant without further transformations, or if it requires further inference, and finally, if not relevant, whether to redo the search differently.

I can do that for you!

Defining the LLM's persona as a helpful conversational agent works well for simple tasks but introducing RAG context can lead to false system capability assumptions. The model may "overpromise", presuming it can perform actions that it is not able to do. The term "overpromised" was coined by our product team to describe situations where the model presumed it could independently do an action related to the answer it was trying to generate. Even with identical inputs, the LLM's responses vary, with a consistent percentage being incorrect.

For example, a seamlessly innocuous user request such as:

User: Yes, I live alone. I have a question, is there a transaction fee if I pay my bill with a credit card?

Can result on the model responding:

LLM: Absolutely, I can help with that. There is no transaction fee if you pay with a credit card. It's a convenient way to manage your payments without any extra charges.

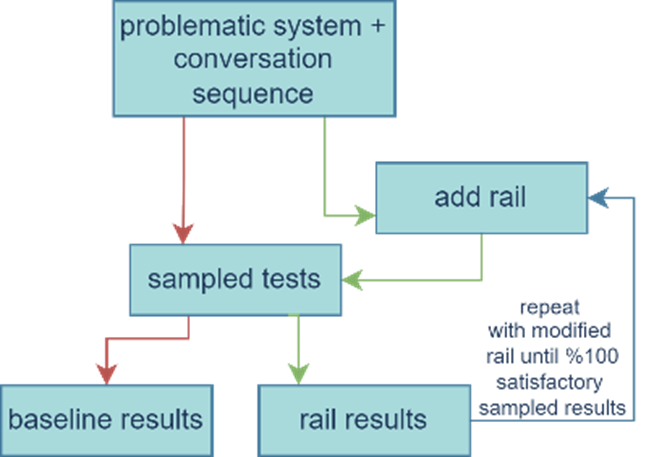

The response inaccurately implies the model can process payments. In this case, 12 out of 20 responses were incorrect during repeated testing. The regularity of these errors suggested a potential for correction but attempts to permanently add instructions to the system prompt were unsuccessful and disrupted other goals. We then included the instructions in the record shown to the model upon retrieval. We theorized this would increase adherence to instructions. This approach reduced the 12 errors to 6, and eventually to none after further adjustments, leading to our RagRails strategy.

RagRails involves adding guiding instructions to a record to aid the LLM. The additional context helps the model to avoid misconceptions and potential negative effects while reinforcing desired behaviors. RagRails is only used when the record is retrieved and relevant. We performed sample tests for consistency and evaluated responses based on LLM and developer effort to ascertain the suitability of the "railed" response. The key to this method is the repeatability of the test, as a positive result may mask future undesirable outcomes.

The cost we pay:

Maintaining our current path may result in higher inference costs due to the additional information provided to the model. However, the dependable and consistent application of Language Models should be prioritized for specific scenarios that require a high degree of truthfulness and precision. To lessen the financial burden of this process, one choice is to use smaller, more finely tuned models for specific tasks such as optimization, entity extraction, relevance detection, and validation. The LLM could then serve as a backup solution when these smaller models are insufficient.

Conclusion

At GEICO Tech, we continue to explore RAG and other techniques as we explore how to use generative technologies safely and effectively, learning from our associates, the scientific, and the open-source community.

Summary

- Working with Large Language Models (LLMs) often involves dealing with hallucinations. "Overpromising" is a subset of hallucinations where the model makes incorrect assumptions about its own capabilities.

- Retrieval Augmented Generation (RAG) is a proven solution that is cost-effective, flexible, transparent, and efficient. It requires pipelines to convert dense knowledge sources into semantic vector representations for efficient retrieval.

- To minimize hallucinations, it's crucial to implement sorting, ranking, and relevance checks for the selection and presentation of knowledge content.

- When more context is added, the risk of hallucination may increase. RagRails proved to be effective in reducing undesired model responses for the "overpromising" problem encountered during our experimentation.

References

- Liu, Hanchao, et al. "A survey on hallucination in large vision-language models." arXiv preprint arXiv:2402.00253 (2024).

- A Comprehensive Survey of Hallucination Mitigation Techniques in Large Language Models.

- References Chatgpt: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet of Things and Cyber-Physical Systems, 3:121-154.

- Tonmoy, S. M., et al. "A comprehensive survey of hallucination mitigation techniques in large language models." arXiv preprint arXiv:2401.01313 (2024).

- Peng, Baolin, et al. "Check your facts and try again: Improving large language models with external knowledge and automated feedback." arXiv preprint arXiv:2302.12813 (2023).

- Ji, Ziwei, et al. "RHO: Reducing Hallucination in Open-domain Dialogues with Knowledge Grounding." arXiv preprint arXiv:2212.01588 (2022).

- Lewis, Patrick, et al. "Retrieval-augmented generation for knowledge-intensive nlp tasks." Advances in Neural Information Processing Systems 33 (2020): 9459-9474.

- Malkov, Yu A., and Dmitry A. Yashunin. "Efficient and robust approximate nearest neighbor search using hierarchical navigable small world graphs." IEEE transactions on pattern analysis and machine intelligence 42.4 (2018): 824-836.

- Mündler, Niels, et al. "Self-contradictory hallucinations of large language models: Evaluation, detection and mitigation." arXiv preprint arXiv:2305.15852 (2023).

- Yan, Jing Nathan, et al. "On What Basis? Predicting Text Preference Via Structured Comparative Reasoning." arXiv preprint arXiv:2311.08390 (2023).

- Liu, Nelson F., et al. "Lost in the Middle: How Language Models Use Long Contexts. CoRR abs/2307.03172 (2023)." (2023).

- Rackauckas, Zackary. "RAG-Fusion: a New Take on Retrieval-Augmented Generation." arXiv preprint arXiv:2402.03367 (2024).